Temporary tables statistics when used in stored procedures

Page Contents

Summary

Sometimes when we design solutions that implement complex business rules we tend to use temporary objects, temporary tables in particular. Decoupling complex queries into the smaller “intermediate” results may help optimiser to come up with a better plan since it needs to resolve simpler queries. It can also make code more readable and maintainable. This approach, of course, needs to be carefully planned since the excessive use of temporary objects may degrade query performances, deviate from set-based design principles, and do more damage than good. Some of the common patterns “When to break down big queries” can be found here.

Depending on the task, we might decide to use temporary tables over temp variables. This may be due to the different scope and/or due to the fact that temp tables are more “robust” and support statistics.

This blog explores specific case scenarios, including temp tables used in stored procedures and the unique behavior of related statistical information that can lead to suboptimal query plans.

Sample data

Let’s create a couple of test tables and a stored procedure that we’ll use throughout the article.

Platform: Microsoft SQL Server 2017 (RTM) Developer Ed.

DROP TABLE IF EXISTS dbo.Transactions --fk constr.

GO

DROP TABLE IF EXISTS dbo.Products

GO

--Products table

CREATE TABLE dbo.Products(

ProductID INTEGER IDENTITY(1,1) NOT NULL

CONSTRAINT PK_ProductID PRIMARY KEY CLUSTERED

,[Name] NVARCHAR(256) NOT NULL

,ListPrice DECIMAL(10,2) NOT NULL

,ModifiedDate DATETIME2

INDEX NCI_Name([Name])

)

GO

--Populate Products table with 500 distinct products

;WITH getRowNums AS

(

SELECT TOP 500

RowNo = ROW_NUMBER() OVER(ORDER BY(SELECT NULL) )

,[Name]

FROM sys.columns c

),getDistribution AS

(

SELECT [Name] = CASE

WHEN rws.RowNo % 5 =0 THEN N'A' -- 100

WHEN rws.RowNo % 99 = 0 THEN N'B' -- 4

WHEN rws.RowNo % 36 = 0 THEN N'C' -- 10

WHEN rws.RowNo % 14 = 0 THEN N'E' -- 27

WHEN rws.RowNo % 499 = 0 THEN 'Z' -- 1

ELSE N'F' --358 products with name that starts with letter 'A'

END + rws.[Name] + CAST(rws.RowNo AS NVARCHAR(3))

,Price = ABS(CHECKSUM(NEWID())) % 44.23

,ModifiedDate = DATEADD(MINUTE,RowNo,SYSDATETIME())

FROM getRowNums rws

)

INSERT INTO Products([Name],ListPrice,ModifiedDate)

SELECT [Name],Price,ModifiedDate

FROM getDistribution

-- check the product names distribution

/*

SELECT [Name Starts With..] = LEFT([Name],1)

,[Distribution] = COUNT([Name])

FROM dbo.Products

GROUP BY LEFT([Name],1)

ORDER BY [Distribution] ASC

*/

-- Order Transactions table

DROP TABLE IF EXISTS dbo.Transactions

GO

CREATE TABLE dbo.Transactions(

OrderId INT IDENTITY(1000,1) NOT NULL

CONSTRAINT PK_OrderId PRIMARY KEY CLUSTERED

,ProductId INT

CONSTRAINT FK_ProductId_ProductsProductId

REFERENCES dbo.Products(ProductID)

,OrderQuantity INT

,ModifiedDate DATETIME2

,INDEX NCI_ProductId(ProductId)

)

GO

--each product was ordered 500 times

INSERT INTO dbo.Transactions(ProductID

,OrderQuantity

,ModifiedDate

)

SELECT ProductID

,Quantity = ABS(CAST(NEWID() AS BINARY(6)) % 10) + 1 --random int between 0-10

,ModifiedDate = SYSDATETIME()

FROM dbo.Products p

CROSS JOIN (SELECT TOP 500 r = 1

FROM sys.columns) x

GO

--stored proc:

--Show the number of orders for products whose names begin with certain letters.

DROP PROCEDURE IF EXISTS dbo.testTempTableStats

GO

CREATE PROCEDURE dbo.testTempTableStats(

@StartsWith NVARCHAR(5)

)

AS

BEGIN

CREATE TABLE #temp(

ProductId INTEGER

,ProductName NVARCHAR(256)

)

INSERT INTO #temp

SELECT pr.ProductID

,pr.[Name]

FROM dbo.Products pr

WHERE pr.[Name] LIKE @StartsWith + '%'

SELECT t.ProductName

,NoOfOrders = COUNT(DISTINCT tr.OrderId)

FROM dbo.Transactions tr

INNER JOIN #temp t

ON tr.ProductId = t.ProductId

GROUP BY t.ProductName

DROP TABLE #temp;

END

The code above is a “complex query” that we decided to decouple by using a temp table. First, we store all relevant products in the temp table and then join the table with a big table in order to calculate the required aggregates. This is one of the common temp tables use cases. We expect our stored procedure to be called with widely varying parameters i.e For all products names that start with ‘B'(4 products) we expect 2000 matching rows that needs to be aggregated (500 orders per product), and for those products whose name starts with ‘A’ (100 products), we expect 25x more -50000.

Since temp tables support statistics and our DB is set up to perform auto create statistics/auto update stats, we also expect to get the good plans for the varying input parameters. In other words we expect query plan to be recompiled if needed – In addition, we also expect query to recompile due to the correctness related reasons (the temp table is created and destroyed every time we run the query)

Test scenario

Run the code from Session1.

DBCC FREEPROCCACHE

GO

EXEC testTempTableStats

@StartsWith = N'C';

GO

Figure 1, The plan created for the first input parameter

As we can see, the plan is good. The temp table Carnality estimates are good, Nested Loop physical operator estimates are aligned with the actual no of rows and the Sort operator was granted enough memory to perform distinct sort.

Now, say another client runs the same stored proc in the context of a different session with a different parameter.

Run the code from Session2

EXEC testTempTableStats

@StartsWith = N'A';

Figure 2, The original plan did not change for the new parameter

Session2 Client gets a horrible plan. Starting from the temp table carnality, everything seems to be off. Nested Loop physical operator estimates are now totally wrong, and the Sort operator does not have enough granted memory to perform the sort operation and needs to spill 231 pages(~2MB) to the disk.

If we execute the same stored proc in the context of a session passing @StartsWith parameter value N’F’, we’ll get the same plan but again with wrong estimates and even worse Sort operator spills.

Analysis

From the previous example, we can see that the first passed parameter dictates the query plan structure. The plan “never changes” for consecutive calls. This looks like a parameter sniffing problem, but our query predicates do not include any parameters.

To investigate and explain this behavior let’s start by monitoring the following parameters:

- Query compilation/recompilation – Using MS Profiler

- Temp table’s automatically created statistics – Using database console command SHOW_STATISTICS.

Set up Profiler

Include the following events in Profiler’s trace

Figure 3, Profiler – events and properties

Add temp table statistic tracking to the query

ALTER PROCEDURE dbo.testTempTableStats

...

..

SELECT t.ProductName

,NoOfOrders = COUNT(DISTINCT tr.OrderId)

FROM dbo.Transactions tr

INNER JOIN #temp t

ON tr.ProductId = t.ProductId

GROUP BY t.ProductName

-- Show automatically created statistics for the column "ProductName"

DBCC SHOW_STATISTICS ('tempdb.dbo.#Temp', ProductName)

WITH STAT_HEADER

,HISTOGRAM;

DROP TABLE #temp;

END

Start the trace and run the stored procedure again.

Note: Altering stored procedure automatically removes existing, cached batch.

EXEC testTempTableStats @StartsWith = N'C';

The query result shows auto-created statistics on the temp table’s ProductName column.

Figure 4, First parameter statistics

Figure 4, First parameter statistics

The plan created is the same as on Figure1

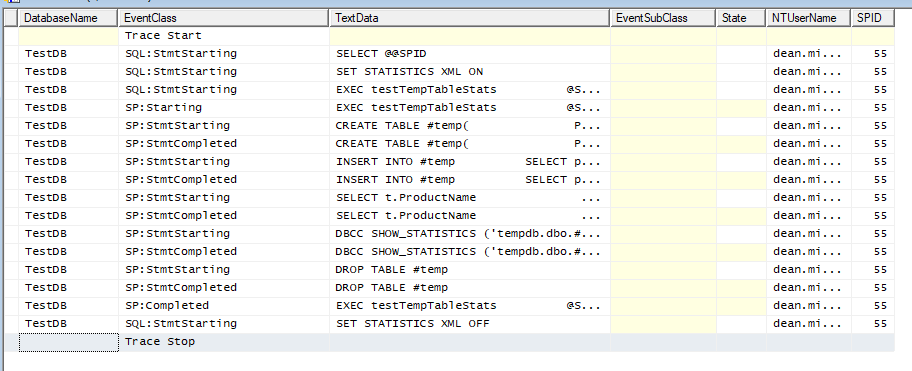

The Profiler trace shows the following sequence of events:

Figure 5, Profiler -The first plan compilation

This plan is exactly what we expected to see.

The unit of compilation and caching is a batch – in this case, the content of our stored procedure. During batch compilation, the individual statements are compiled one after another.

Short detour: What happens when we create a stored proc? After hitting F5(executing CREATE PROCEDURE …), SQL Server parses the procedure definition for syntactical accuracy. If the statements are syntactically correct, the text of the stored procedure is stored in an internal table accessible through the sys.sql_modules system view. At this stage, it is possible to reference non-existing objects within the batch i.e it is possible to include a query that inserts data in a non-existing table, or to select data from a non-existing function. Later, when we execute the stored procedure for the first time, the process known as “Deferred Name Resolution” will check the names of the referenced objects and consequently initiate the Recompile of the query segment which references the objects.

Follow steps 1- 6 on the trace above.

- (not captured by the trace). The execution environment has performed the initial batch compilation. Each query within the batch is compiled separately(Starting from SQL Server Yukon :). The initial compilation was incomplete and only a skeleton plan was created – CREATE TABLE query is the only one fully compiled. The second, INSERT query and the following SELECT query could not be compiled since the temp table did not exist before the very first execution. The skipped compilations are deferred until the execution time.

- Batch execution started and the first query was executed (a brand new temp table was created)

- The second query (INSERT INTO..#Temp) has to be recompiled due to Correctness related reasons. The EventSubClass property describes the cause of Recompilations

-

-

- A new object(temp table) has been created. The query recompile has been initiated.

- Query Optimiser loads all “interesting” statistics that can help build a good plan. The statistic belongs to the tables included in the query. If needed, QO will pause the process to automatically create additional statistics on the columns that can help build the plan*. For this query, QO does not need to auto-create stats.

-

-

- The INSERT INTO …#Temp.. query is now recompiled and executed.

- The third query(the one we are analysing) has to be recompiled due to similar reasons as the previous query. After loading all “interesting” stats – which belong to dbo.Transactions, QO decided to stop the execution process and to auto-create statistics on #temp table columns: ProductId and ProductName

- StatMan – an internal process, that creates auto-stats on the ProductId column(#temp table). The ProductId column is used in the JOIN predicate.

- StatMan creates auto-stats on the ProductName column(#temp table). The ProductName column is used for the grouping. The stat header and histogram are selected in the query output.

Auto stat name convention: _WA_Sys_prefix. * In our example: _WA_Sys_00000002_AA7DA731, 00000002 – Column ordinal position, AA7DA731 – Hexadecimal #Temp table ObjectID.SELECT CAST(0xAA7DA731 as INTEGER) /* Object_id = -1434605775 */ - The SELECT query is now recompiled and executed.

- (Batch execution successfully completed)

*NOTE: _WA – Stands for Waterloo, Canada(Sybase, the Father of Sql Server was developed in Waterloo) . This is mentioned in 70-462.

Clear Profiler’s trace and execute the same stored procedure, this time with parameter ‘A’

EXEC testTempTableStats @StartsWith = N'A';

Strangely enough, the statistical information (header and histogram) is totally wrong. i.e Table carnality should be 100, not 10 and the histogram steps should show product names that start with ‘A’ not ‘C’.

Figure 6 – Profiler, No query recompiles

Figure 6 – Profiler, No query recompiles

The trace shows no recompiles due to schema changes(CREATE TABLE #Temp) and no stat. updates. From the optimality point of view, the Query engine executed the first query(input param ‘C’). The result set is correct, but it seems that everything else is wrong. The first question is why we are missing recompiles due to schema changes (a brand new temp table was created and populated with the new values). The second question is why the auto-created statistics on the temp table were never updated. Is this why our query never gets recompiled due to plan optimality-related reasons.

Temporary tables caching

The temp table caching mechanism may explain the lack of schema-related recompiles in the previous example. This may also explain the stale statistics.

The caching feature was introduced in SQL Server 2005. This functionality provides the caching of temporary objects(temp tables, table variables, and TVFs) across repeated calls of routines(Stored procedures, triggers, and UDFs).

In short, When a stored procedure execution ends, SQL Server truncates* and renames the table, keeping only one IAM and one data page. The structure will be used by the subsequent calls instead of allocating new pages from scratch when the object is created again.

NOTE: For the temp objects smaller than 8MB, the truncation happens immediately after module execution ends. For the larger temp objects, SQL Serer performs “deferred drop” and immediately returns control to the application.

The caching mechanism works for the temp tables that is created by using CREATE TABLE or SELECT INTO statements. The caching is not possible when there is an explicit DDL on a temp table after it is created i.e ALTER #table ADD Constraint, CREATE STATISTICS** on table colums or there is a named constraint on a temp table(this is generally a bad idea since it can lead to constraint name conflict). Temporary tables are also not cached if they are part of dynamic SQL or ad-hoc batch.

NOTE: Statistics created using an explicit CREATE STATISTICS statement are not linked to a cached temporary object. Auto-Created statistics(like the ones presented in the example) are linked to a cached temp object. UPDATE STATISTICS does not prevent temp table caching.

The following query shows that there is one cached temp object currently not in use

SELECT mcc.[name],

mcc.[type],

mcc.pages_kb,

mcc.pages_in_use_kb,

mcc.entries_count,

mcc.entries_in_use_count

FROM sys.dm_os_memory_cache_counters AS mcc

WHERE mcc.[type] = N'CACHESTORE_TEMPTABLES'

name type pages_kb pages_in_use_kb entries_count entries_in_use_count

------------------------ --------------------- -------- --------------- ------------- --------------------

Temp Tables & Table Vars CACHESTORE_TEMPTABLES 16 0 1 0

We track the temp table name by adding SELECT OBJECT_ID(‘tempdb.dbo.#temp’) in the example stored proc. This will show that the temp table object_id never changes. When the stored procedure completes execution, the internal process changes the name of the temp table to hexadecimal form. This will be the case even if we explicitly drop the temp table at the end of the sproc batch.

If a stored procedure is executed concurrently, multiple separate cached objects may be created in tempdb. There is a cached temp object per execution context.

This feature explains why our plan has not been recompiled due to schema changes related reasons. But what about statistics? As previously mentioned, it is expected for the query processor to be able to detect the statistical changes that can affect the cached plan validity and to adapt to it. But it didn’t t happen, why?

Recompilation Reasons

There are many different reasons that can cause a query to be recompiled(EventSubClass Figure 5). The list of all possible recompilation reasons (SP:Recompile event) can be retrieved using the query below.

SELECT xemv.map_key,

xemv.map_value

FROM sys.dm_xe_map_values AS xemv

WHERE xemv.name = N'statement_recompile_cause'

ORDER BY xemv.map_key;

For the purpose of this analysis, we are interested only in Schema changed and Statistic changed reasons. Temp table caching can explain the absence of the former reason.

Updating statistics (both manual and auto-update) may trigger the Optimality(data) related recompilation of the plans that use these statistics.

RT – Recompile thresholds

SQL Server query processor is designed to adapt the cached query plans to the data changes. The changes are tracked by statistical information(histograms) linked to the “interesting” table columns. Statistical information changes may cause query recompilation due to plan optimality-related reasons.

During the query optimisation phase, the Query processor identifies and loads the “interesting” statistics. This helps QP to create good enough plans. In our case, QP loads the following, existing statistics.

- NCI_Name (Statistics on dbo.Products Name column created as a part of the NCI index on the column)

- NCI_ProductId(Statistics on dbo.Transactions ProductId column also created with the NCI index on the column)

- (Auto Created)#temp table auto-created statistics on #temp.ProductName and #temp.ProductId

Use the “undocumented” trace flag to find out the interesting statistics used in our example:

-- use execution plan XML output to find

-- <ModTrackingInfo> tag

DBCC FREEPROCCACHE

GO

DBCC TRACEON(8666)

EXEC dbo.testTempTableStats

@StartsWith = 'C'

GO

DBCC TRACEOFF(8666)

GO

The table contents are constantly changing (INSERT, UPDATE, DELETE, MERGE). SQL Server query processor tracks those changes in order to estimate their impact on the existing cached plans.

For each “interesting statistics” loaded, Query processor creates a snapshot of a counter that counts the number of table modifications. Generally, every table referenced in a query will have such counters loaded.

The table contents are tracked:

- Directly – using table cardinality metadata information

- Indirectly – using statistics on table columns.

RT = f(n), n -number of rows in a table (table cardinality)

Essentially, RT for a table defines the frequency with which queries that refer to the table recompile.

During the first query compilation, for each interesting statistics, a snapshot value of a counter(colmodctr) that counts a number of table modification is stored – a counter per column that participates in the interesting statistics.

Before using an existing cached plan, the Query optimiser performs The threshold crossing test defined by the formula below.

| colmodctr(current) – colmodctr(snapshot) | >= RT

current – refers to the current value of the modification counter

snapshot – refers to the value of the mod. counter captured during the last plan compilation(recompilation).

If the threshold crossing test evaluates to TRUE (the number of changes exceeds the pre-defined RT), for any of the interesting statistics, the query plan is recompiled.

The threshold crossing test will be performed even if the query processor does not load any interesting statistics. In that case, the test will be based simply on table cardinalities.

| cardinality(current) – cardinality(snapshot) | >= RT

The following table shows how RT is calculated for permanent and temporary tables

| Table cardinality | Recompile Threshold | Passing RT test caridnality |

| n<6 | 6 | >= (n+6) |

| 6 <= n <= 500 | 500 | >= (n+500) |

| n > 500 | 500 +0.20*n | >= (n +500 +0.20*n) |

| Table cardinality | Recompile Threshold | Passing RT test caridnality |

| 6 <= n <= 500 | 500 | >= (n+500) |

| n > 500 | 500 +0.20*n | >= (n +500 +0.20*n) |

colmodctr

colmodctr is the name of a counter which counts the number of modifications that a table has undergone. The counter is per column and is non-transnational (if we insert 10 rows and rollback the transaction, colmodctr value will increase by 20). The counter is also ever-increasing.

So far, Temp table caching can explain why our query does not get recompiled due to schema changes. Now, let’s find out why our query use stale statistics and why it does not perform optimality-related query recompilation.

Automatically created statistics are cashed along with the cached temp tables. In this case, auto-created statistics are NOT reset at the end of the stored procedure execution cycle nor at the beginning of a consecutive stored proc. execution.

This is why statistics on a cached temporary table may belong to one of the previous stored procedure calls and are absolutely not related to the context of the current temp table.

If we used a “normal”(dbo.Temp) table instead of the #temp table, the table would be dropped and recreated on each stored procedure call. Consequently, auto-created statistics would be repeatedly dropped and created which would make them always up-to-date.

The modification counters which count changes on the interesting columns, in our example columns: ProductName and ProductId will not cause query recompiles even if the number of the changes passes the RT threshold test. This behavior is unique to the temp tables(Cached or non-cached *) when used within stored procedures.

Note*: To prevent temp table caching, perform a DDL operation on the newly created temp table. e.g

...

CREATE TABLE #temp(

ProductId INTEGER)

ALTER TABLE #temp

ADD ProductName NVARCHAR(256)

..

/* -- this change will cause Err 2767, Could not locate statistics 'ProductName' in the system catalogs

-- starting from the second execution

DBCC SHOW_STATISTICS ('tempdb.dbo.#Temp', ProductName)

WITH STAT_HEADER

,HISTOGRAM;

*/

Err 2667: The statistics will be internally present, but not available through sp_helpstats, DBCC SHOW_STATISTICS, and tempdb.sys.stats. My assumption would be that the auto-crated stats. BLOB entry gets “disconnected” from temp table’s object_id -> For non-cached temp tables, the object_id changes on every sp run.

What is also interesting with the non-cached table is that it does not force our query to be recompiled due to schema change reasons as we might expect.

Back to the original example, to be able to track the modification counters, expand dbo.testTempTableStats stored procedure with the dynamic query below.

--tSQL 2017 syntax

CREATE OR ALTER PROCEDURE dbo.testTempTableStats(

@StartsWith NVARCHAR(5)

)

AS

BEGIN

CREATE TABLE #temp(

ProductId INTEGER

,ProductName NVARCHAR(256)

)

INSERT INTO #temp

SELECT pr.ProductID

,pr.[Name]

FROM dbo.Products pr

WHERE pr.[Name] LIKE @StartsWith + '%'

SELECT t.ProductName

,NoOfOrders = COUNT(DISTINCT tr.OrderId)

FROM dbo.Transactions tr

INNER JOIN #temp t

ON tr.ProductId = t.ProductId

GROUP BY t.ProductName

---sys.dm_db_stats_properties works only of the current db context :(

EXECUTE( N'USE tempdb;

SELECT [Object] = object_name(s.object_id)

,s.[name]

,sp.last_updated

,sp.[rows]

,s.auto_created

,sp.modification_counter

FROM sys.stats s

CROSS APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) sp

WHERE s.object_id = object_id(''#temp'',''U'');'

);

-- Show automatically created statistics for the column "ProductName"

DBCC SHOW_STATISTICS ('tempdb.dbo.#Temp', ProductName)

WITH STAT_HEADER

,HISTOGRAM;

DROP TABLE #temp;

END

Now execute the query with the parameter ‘C’

DBCC FREEPROCCACHE

GO

EXEC testTempTableStats

@StartsWith = N'C';

GO

Figure 7, colmodctr – modification counters

Figure 7, colmodctr – modification counters

On the first query execution, the query optimiser sets up the RT values for the two auto-created columns. The Recompilation thresholds are based on the temp table’s cardinality (NoOfRows = 10). Based on the previously mentioned formula ( 6 < n < 500, RT = 500 ), in order to pass the RT crossing test we’ll need at least 510(500+10) changes on either of the two columns to initiate query recompilation. This means that, if we execute stored proc 6 times passing parameter ‘A‘ (populates temp table with 100 rows), we will reach 6*100 = 600 changes which are more than we need to pass the test.

EXEC dbo.testTempTableStats

@StartsWith = 'A'

GO 6

The 6th execution shows the following results, but no expected recompile.

Figure 8, colmodctr – modification counters do not initiate query recompiles

The table below shows colmodctr counter’s progress:

Figure 9, modification counter progress

As we can see, the query was not recompiled due to optimality reasons. Non-cached temp tables will show the same behavior. The colmodctr simply does not work for temp tables within stored procedures.

If we repeat the experiment, but this time we pass parameter ‘B'(param ‘B’ results in 4 rows in the temp table) for the very first stored procedure call, we will get a similar output as in Figure 7. RT value is now set to 6, 1<n<6, RT = 6, which means that we need at least 10(6+4) changes on the interesting temp table columns to initiate query recompilation.

If we execute the same stored procedure(with param ‘B’), say two more times, the modification counters will show 16 changes and, as expected, with no recompile.

But if we execute the stored procedure with a parameter that generates 10 or more rows in the temp table, i.e ‘C’ or ‘E’ (10 and 27 rows) our query will recompile.

DBCC FREEPROCCACHE

GO

-- this will set up RT = 10

EXEC testTempTableStats

@StartsWith = 'B'

GO

-- modification counter does not initiate recompiles

EXEC testTempTableStats

@StartsWith = 'B'

GO 2 -- no recompiles

DBCC FREEPROCCACHE

GO

-- this will set up RT = 10

EXEC testTempTableStats

@StartsWith = 'B'

GO

-- a parameter that generates 10 or more rows in temp table do initiate query recompile

EXEC testTempTableStats

@StartsWith = 'C' -- 'E', [A-Z],'A' ...

The experiment shows that the Query processor does track temp table cardinality changes.

The threshold crossing test will be based on temp table cardinality.

| cardinality(current) – cardinality(snapshot) | >= RT

In our case RT =6, the expected current table cardinality is 10, | 10 – 4 | >=6, and the RT crossing test evaluates to TRUE.

The interesting thing about this is if our code uses a temp table that has a relatively stable number of rows i.e ~500, it is likely that we’ll end up with one plan that will never change due to optimality reasons. If our sp creates a query plan for @StartsWith = ‘C’ parameter that sets up RT=500, the query optimizer will expect the temp table’s cardinality to increase to at least 510 rows, which will never happen if our table has a maximum of 500 rows. The difference will be more visible i.e in the case when the Query optimizer sets RT to 500 +20%*n (total number of rows – see the RT tables above). The expected cardinality that will initiate recompile will be n + 500 +0.2*n.

Possible solutions

WITH RECOMPILE

One of the obvious solutions for this problem would be to create a stored procedure WITH RECOMPILE. This will

- Prevent temp table caching

- Prevent stored procedure query plan caching

- Recompile all queries in the stored procedure on each execution.

--tSQL 2017 syntax

CREATE OR ALTER PROCEDURE dbo.testTempTableStats(

@StartsWith NVARCHAR(5)

)

WITH RECOMPLILE

AS

BEGIN

..

This method may work out of the box, but may also be expensive since the whole batch that can contain quite a few complex queries must compile on each call, and then, during the execution, separate queries may be recompiled due to previously mentioned reasons.

EXECUTE .. WITH RECOMPILE

In situation, we know that the cached query plan works for the most common range of input parameters. If we are able to identify those parameters for which the plan would be sub-optimal, we can create a wrapper stored procedure that controls the main stored procedure execution properties.

--wrapper stored procedure

CREATE OR ALTER PROCEDURE testTempTableStatsWrapper(

@StartsWith NVARCHAR(5)

)

AS

BEGIN

SET NOCOUNT ON;

IF @StartsWith IN ('Z','B','C','E')

EXECUTE testTempTableStats

@StartsWith

ELSE

EXECUTE testTempTableStats

@StartsWith

WITH RECOMPILE

RETURN;

END

A stable plan will be cached for the parameters Z, B, C, and E, and a second, non-cached plan will be constructed each time we pass any other parameter value than the four mentioned above.

OPTION RECOMPILE and UPDATE STATISTICS

To overcome the problem of stale statistics, we can add OPTION RECOMPILE to our original query. This will force our query to recompile.

--tSQL 2017 syntax

CREATE OR ALTER PROCEDURE dbo.testTempTableStats(

@StartsWith NVARCHAR(5)

)

AS

BEGIN

..

SELECT t.ProductName

,NoOfOrders = COUNT(DISTINCT tr.OrderId)

FROM dbo.Transactions tr

INNER JOIN #temp t

ON tr.ProductId = t.ProductId

GROUP BY t.ProductName

OPTION (RECOMPILE);

...

If we repeat the first test and pass parameters ‘C’ and ‘F’ respectively, we can see that the “F” param‘s option recompile updates only the temp table’s cardinality, from 10 rows to 358 rows. The process skips updating auto-created statistics on ProductName and ProductId.

DBCC FREEPROCCACHE; GO EXEC dbo.testTempTableStats @StartsWith = 'C' GO EXEC dbo.testTempTableStats @StartsWith = 'F' GO

Figure 10, Stale statistics, correct cardinality

OPTION(RECOMPILE) forces the query to recompile, but it does not detect stale statistics. It also “activates” rowmodcnt (modification_counter). In this case, after the first execution(param ‘C’), RT is set to 500. QO needs to detect at least 510 changes on the significant columns to initiate the recompile. In our case, when the modification counter reaches 510+ changes(Two consecutive calls passing param. “A”), including temp table truncates, QO will update statistics as a part of the query recompilation.

1st Execution Param “C” : RowNo=10: RT=500; modification_counter = 0

2nd Execution Param “A” : RowNo=358: RT=500; modification_counter = 10(truncate) + 358 = 368

3rd Execution Param “A” : RowNo=358: RT=500; modification_counter = 358(Truncate) + 368 = 726** RT crossing test evaluates to TRUE -> Update Statistics.

For non-cached temp tables, OPTION RECOMPILE does update auto-created statistics as well.

We can force the query optimizer to update auto-created statistics using UPDATE STATISTICS #temp. The complete solution would be …

--tSQL 2017 syntax

CREATE OR ALTER PROCEDURE dbo.testTempTableStats(

@StartsWith NVARCHAR(5)

)

AS

BEGIN

CREATE TABLE #temp(

ProductId INTEGER

,ProductName NVARCHAR(256)

)

INSERT INTO #temp

SELECT pr.ProductID

,pr.[Name]

FROM dbo.Products pr

WHERE pr.[Name] LIKE @StartsWith + '%'

UPDATE STATISTICS #temp;

SELECT t.ProductName

,NoOfOrders = COUNT(DISTINCT tr.OrderId)

FROM dbo.Transactions tr

INNER JOIN #temp t

ON tr.ProductId = t.ProductId

GROUP BY t.ProductName

OPTION(RECOMPILE);

---sys.dm_db_stats_properties works only off the current db context :(

EXECUTE( N'USE tempdb;

SELECT [Object] = object_name(s.object_id)

,s.[name]

,sp.last_updated

,sp.[rows]

,s.auto_created

,sp.modification_counter

FROM sys.stats s

CROSS APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) sp

WHERE s.object_id = object_id(''#temp'',''U'');'

);

-- Show automatically created statistics for the column "ProductName"

DBCC SHOW_STATISTICS ('tempdb.dbo.#Temp', ProductName)

WITH STAT_HEADER

,HISTOGRAM;

DROP TABLE #temp;

END

It is a bit unusual place to put the UPDATE STATISTICS statement since we expect auto-created statistics to be constructed during our query compilation/recompilation process, not before. But then, if we put the statement after the query, UPDATE STATISTICS will not be able to have an effect on the preceding query.

BATCH MODE MEMORY GRANT FEEDBACK (SQL Server 2017+)

This solution is just an experimental one. In SQL Server 2017 Microsoft introduced Adaptive Query Processing, a few new query processing features set to improve query performance. The new features (v1.0) are

- Batch mode memory grant feedback

- Batch mode adaptive joins and

- Interleaved execution

More about this interesting set of features can be found here.

The first two features are available in queries that reference one or more objects with columnstore indexes. Opposed to row-mode execution style, when rows are passed/processed between iterators(operators) one at a time, the batch processing operates on a 64bytes structure(8 pages). The allocated memory space can host between 64 and 900 rows depending on the number of columns selected.

To activate the features(and the batch processing) we can create a “dummy” columnstore index with an int column and LEFT JOIN it with our query. The new join will not change the query logic nor the result-set.

--Create a dummy columnstore index

DROP TABLE IF EXISTS dbo.dummyColumnstore;

GO

CREATE TABLE dbo.dummyColumnstore(i int)

GO

CREATE NONCLUSTERED COLUMNSTORE INDEX nc_csi_id

ON dbo.dummyColumnstore(i)

GO

--add an extra LEFT JOIN to the original query

CREATE OR ALTER PROCEDURE dbo.testTempTableStats(

@StartsWith NVARCHAR(5)

)

AS

BEGIN

CREATE TABLE #temp(

ProductId INTEGER

,ProductName NVARCHAR(256)

)

INSERT INTO #temp

SELECT pr.ProductID

,pr.[Name]

FROM dbo.Products pr

WHERE pr.[Name] LIKE @StartsWith + '%'

SELECT t.ProductName

,NoOfOrders = COUNT(DISTINCT tr.OrderId)

FROM dbo.Transactions tr

INNER JOIN #temp t

ON tr.ProductId = t.ProductId

LEFT OUTER JOIN dbo.dummyColumnstore dcc -- columnstore idx

ON tr.ProductId = dcc.i

GROUP BY t.ProductName;

DROP TABLE #temp;

RETURN;

END

If we run the original test again, first with param ‘C’ and then with param ‘F’, we’ll notice a couple of interesting changes in the query execution plan.

DBCC FREEPROCCACHE; GO EXEC dbo.testTempTableStats @StartsWith = 'C' GO EXEC dbo.testTempTableStats @StartsWith = 'F' GO

The first execution creates a new plan

Figure 11, Adaptive Query Processing features

Figure 11, Adaptive Query Processing features

We can notice a new Adaptive Join operator and a new, batch execution mode for Hash Match Aggregate and Compute scalar operators.

The next execution (param ‘F’) will result in underestimated memory grants for the Hash Match Aggregate operator. This is due to stale statistics, as it was shown earlier.

Now, if we execute the same query again(param ‘F’), the Batch mode memory grant feedback feature will FIX the problem with the operator by allocating enough memory for the operation. This will fix the problem with the Aggregate operator and prevent data spills. However, the original problem with the stale statistics, etc… will stay.

Conclusion

Temporary tables when used in stored procedures may have totally wrong statistics. This can lead to performance degradation due to sub-optimal plans including not enough accurate memory grants, wrong temp table cardinality estimates, etc. This is partially due to the temp table caching mechanism. The feature is extremely useful since it provides more efficient temp table reuse across frequent stored procedure calls, particularly in OLTP workloads. Between stored proc. calls, the column modification counters on interesting temp table columns do not initiate query recompiles ( even if the RT crossing test evaluates to true). However, the RT crossing test that depends on the temp table’s cardinality changes, if evaluated to true, will initiate query recompiles.

Forcing the Query optimizer to perform a statement recompile does not resolve the problem. For the cached temp tables, it fixes cardinality estimates but does not update relevant statistics. For non-cached tables, it resolves the problem (everything is up to date). Combining UPDATE STATISTICS #tempTable and OPTION RECOMPILE is one way to workaround the problem.

The example demonstrates a common scenario when a temp table is used to reduce query plan complexity. The table stores a relatively small number of rows and drives a more complex query. If the cached plan is sensitive to the cardinality and statistical distribution of the values in the temp table, the plan reuse may result in stored procedure performance degradation.

For the larger temp tables with widely differing numbers of rows on every execution, it is likely that the main query recompiles due to temp table cardinality changes.

For the query plans that are less dependent on the temp table statistics and cardinality, we might consider using table variables instead(lightweight, no statistics, cardinality presented as 1row etc.)

Thanks for reading.

Dean Mincic

Figure 6, Stale statistics

Figure 6, Stale statistics

Dean :-

When calculating Price you issued :-

ABS(CHECKSUM(NEWID())) % 44.23

Why bother to include ABS, I would have assumed CHECKSUM will return a positive number.

Other than than, I think you did too much research work for a single blog post.

The man upstairs entrusted you with tremendous gifts, thanks for graciously sharing it.

Happy Thursday.

Best,

Daniel Adeniji

Hi Daniel,

Checksum returns integer data type(signed). It can return negative values for an uniqueidentifier returned by NewId();

As for having too much research, for me personally, that's the fun part.

Regards

Dean :-

As we prepare to submit this for a research paper, going though it with a toothpick.

Please change

SELECT DatabaseName = object_name(s.object_id)

to

SELECT [object] = object_name(s.object_id)

Blessings,

Daniel

Hi Daniel,

Thanks for reading the post. 🙂

You’re right,object_id column in sys.stats system view represents ID of the object to which the statistical information belongs to (SQL Docs, sys.stats).

I’ve changed the alias.

Regards

Is there a way to view the histogram of the statistics that are persisted for these cached objects? I tried doing something like the following but it doesn’t recognize the table name.

DBCC SHOW_STATISTICS (N’tempdb..#Temp’, _WA_Sys_00000001_B12AFDF2)

WITH STAT_HEADER, HISTOGRAM;

Hi Marc,

As mentioned in the article, when the batch that used the cached temp table finishes, the internal process changes the table name e.g from #temp into #BC9C898F

The automatically created statistic stays linked to the cached object.

One way you can retrieve the cached histogram is to

1) find the name of the stored temp table (use SSMS or the query below)

2) use tempTabId and stats_id respectively to get a histogram